Study: How effective are software testing methods on MOUs?

Verification methods reviewed based on performance in other industries; code inspections found to have highest potential effectiveness

By David N. Card, DNV GL – Approval Centre Korea

“Buying software is much like buying food from a street vendor.” – Jeff Voas (1999)

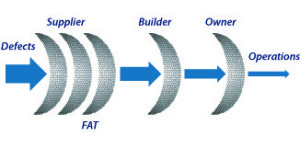

This comment was made in reference to software packages or components, as contrasted with custom software. Mobile offshore units (MOUs) are constructed using a component-based approach. This reduces the price to the vessel owner but obscures the quality status of the product, much as is the case with street food – the purchaser doesn’t know what ingredients are used or what quality assurance was applied during preparation. MOU owners generally do not have insight into supplier software verification activities prior to factory acceptance testing (FAT).

Testing and verification of MOU control systems can be extensive, conducted separately by the supplier, builder and owner. Investment in verification seems adequate, but is it expended in the best way? Little data on the efficiency or effectiveness of verification methods have been collected in the MOU industry. Moreover, new methods are being introduced to test increasingly complex systems. Examples are hardware-in-the-loop (HIL) testing and integrated software dependent systems (ISDS).

DNV GL and industry partners began an effort in 2013 to review the effectiveness of verification methods used in MOUs, based on data about the methods’ performance in other industries. The immediate objective was to help projects develop more effective testing strategies. As Figure 1 suggests, software testing is like a set of filters that capture defects. If all the filters capture the same kinds of defects, other kinds slip through. An effective testing process requires multiple filters to capture the full range of defects while minimizing redundant testing.

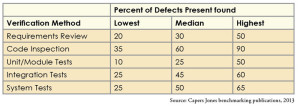
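The filter analogy can be made concrete with a small sketch. The defect categories and the mapping of methods to categories below are hypothetical, chosen only for illustration; they are not data from the study. Each method is modeled as the set of defect types it can catch, and any type not covered by the union of the selected methods escapes.

```python
# Illustrative model of verification methods as defect "filters".
# All category names and method-to-category mappings are hypothetical.
DEFECT_TYPES = {"logic", "interface", "timing", "requirements", "usability"}

FILTERS = {
    "unit test": {"logic"},
    "integration test": {"interface", "logic"},
    "system test": {"interface", "logic", "timing"},
    "peer review": {"logic", "requirements", "usability"},
}


def escaped_types(selected):
    """Return the defect types that none of the selected filters can catch."""
    caught = set().union(*(FILTERS[name] for name in selected))
    return DEFECT_TYPES - caught


# Three overlapping test stages still let two whole categories through...
print(sorted(escaped_types(["unit test", "integration test", "system test"])))
# ['requirements', 'usability']

# ...while two complementary methods cover every category in this toy model.
print(sorted(escaped_types(["system test", "peer review"])))
# []
```

The toy model ignores partial effectiveness within a category; it only shows why adding a redundant filter contributes less than adding a complementary one.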

According to usual practice, neither the owner, the builder nor the classification society has insight into the software verification performed by the supplier before FAT. Moreover, many purchasers of software do not realize how few defects most individual verification methods actually find. Table 1 shows the effectiveness of common verification methods.

Note that integration and system tests, the kinds of tests usually conducted in preparation for and during FAT, each find only about 50% of defects. If system and integration tests are performed in series, we expect approximately 75% of defects to be found. (If the first test finds 50% of the defects present, then 50% remain. If the second test finds 50% of those, then 75% of all defects present have been detected, assuming no new defects have been inserted.) However, the percentage of defects found can range from 37% to 90%, depending on how rigorously the testing is conducted. Multiple verification methods are needed to achieve dependable software.
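The arithmetic in the parenthetical generalizes to any number of methods applied in series. The sketch below assumes, as the text does, that each method finds a fixed fraction of the defects still present and that no new defects are inserted. Real methods tend to catch overlapping defects, so this independence assumption is likely to be optimistic. The function name is illustrative.

```python
from math import prod


def combined_effectiveness(effectiveness):
    """Fraction of the original defects found by methods applied in series.

    Assumes each method finds a fixed fraction of the defects remaining
    after the previous methods, and that no new defects are inserted.
    """
    remaining = prod(1.0 - e for e in effectiveness)  # fraction never caught
    return 1.0 - remaining


print(combined_effectiveness([0.5, 0.5]))       # 0.75, integration + system test
print(combined_effectiveness([0.5, 0.5, 0.5]))  # 0.875, one more 50% method
```

Under these assumptions each additional 50% method only halves the defects that remain, which is why adding methods that catch different kinds of defects matters more than repeating similar tests.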

One result repeated in many scientific studies is that code inspections have the highest potential effectiveness. A code inspection is a rigorous and systematic peer review. A peer review is a review of an artifact (document or code) by experienced and knowledgeable staff who are not its original authors. Peer reviews can be applied to any type of software or document, and they require no specialized test equipment. Nevertheless, peer reviews are not practiced rigorously or consistently among offshore software suppliers.

The typical MOU customer has little insight into what verification was performed or how effective it is. This study attempts to address the question of effectiveness of verification methods.

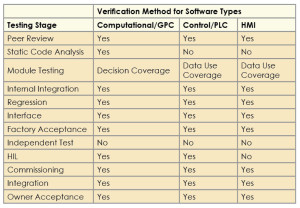

The DNV GL study focused on the testing and verification methods required by ISDS. A literature review showed that the effectiveness of verification techniques depends on the type of software being verified. For the purpose of selecting verification methods, MOU software can be divided into three categories:

• Computational software running on a general purpose computer;

• Control software running on a programmable logic controller (PLC); and

• Human-machine interface (HMI) software running on a general purpose computer.

Table 2 summarizes the appropriate test strategies for the three classes of software based on published research. Note that PLC-based systems are not as common in most other industries as they are in offshore systems.

The testing and verification activities required by ISDS have generally proven effective in other industries. However, some anomalies were found in the applicability of the different methods:

• Good tools for static code analysis are readily available for general purpose computers. However, relatively few such tools are offered for PLCs, and none were identified for HMI software. The most serious problem detected by these tools is the “memory leak,” which typically does not occur in PLC and HMI software.

• The effectiveness of code coverage criteria varies with the type of software. The simplest criterion, statement coverage, appears to add little value because it is too easy to achieve. Nevertheless, suppliers should define appropriate coverage criteria and ensure that their internal testing meets them.

• Published research does not support the contention that independent testing is more effective than diligent developer testing. However, independent testing provides a visible demonstration that the software was tested at least once.

• HIL testing has become widely accepted. As practiced in the MOU industry, HIL testing shares the property of independent testing in that it is an opportunity to ensure that the software is thoroughly tested at least once. In other industries, HIL testing is performed by the system integrator, who maintains the master product model. Even though HIL testing is not “independent” in this case, it is still a common requirement in the automotive and aerospace industries.
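The point about statement coverage can be illustrated with a small, hypothetical function (not taken from any supplier's code). A single test executes every statement, so statement coverage reaches 100%, yet the defect sits on the path where the `if` condition is false. Branch coverage, which requires each decision to evaluate both true and false, forces the test that exposes it.

```python
# Hypothetical function with a latent defect on the "capacity <= 0" path:
# x stays None, and None * 100 raises TypeError.
def percent_of_capacity(flow, capacity):
    x = None
    if capacity > 0:
        x = flow / capacity
    return x * 100


# One test executes every statement (100% statement coverage)...
assert percent_of_capacity(5.0, 10.0) == 50.0

# ...but branch coverage also demands a test where the condition is false,
# and that test exposes the defect.
try:
    percent_of_capacity(5.0, 0.0)
    branch_defect_found = False
except TypeError:
    branch_defect_found = True

print(branch_defect_found)  # True
```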

These results also suggest that some standards for software verification, such as ISDS, should be revised. For example, the requirement for independent testing in some cases may not be cost-effective if suppliers satisfy the other testing and verification requirements of ISDS.

The objective of ISDS is to establish minimum levels of software quality assurance for offshore systems without unduly constraining suppliers and builders. ISDS makes internal verification visible so that its practice can be made systematic and its effectiveness evaluated. DNV GL expects that ISDS will adapt and evolve as more is learned about the effectiveness of these techniques in the offshore industry, as well as in response to new technology and customer needs.

A lesson from this study was the need for greater emphasis on supplier testing before delivery to the shipyard. Currently, software verification often begins at FAT, which can lead suppliers to minimize the internal verification activities that have proved cost-effective in other industries.

Because the MOU industry involves many participants with different objectives, it is prone to sub-optimization, a situation in which each party tries to minimize its own expense without regard for the effect on the overall project. In particular, operational responsibility is separated from newbuild responsibility. Items not resolved during construction can be deferred to operations, or to the period after delivery while operations are waiting to commence. Consequently, standards such as ISDS, which clarify and make visible the testing and verification expectations for all involved parties, can help to improve industry performance.

Acknowledgement: This study was sponsored by a joint industry project supported by Hyundai Heavy Industries, Statoil, Fred Olsen Energy, Rolls-Royce, IBM and Panasia.

This article is based on a presentation at the 2014 IADC Advanced Rig Technology Conference, 16-17 September, Galveston, Texas.