Industry seeks new ways to unlock data, drive optimization

More companies turning to AI-enabled and open-source platforms, both to gain insights from available data and to deploy those insights at scale

By Stephen Whitfield, Associate Editor

The drilling industry is now inundated with more real-time drilling data than ever before, collected through new and existing downhole and surface sensors. However, many organizations have been frustrated in their efforts to realize wide-scale benefits from this plethora of data in terms of improved performance, cost and time savings, often due to the difficulties in sorting and analyzing that data.

To help organizations accelerate the value generation from data, a host of new technologies and platforms have been developed in recent years focusing on real-time data management, remote operations and artificial intelligence (AI)-enabled analytics. In an increasing number of collaborative projects, these technologies have shown value in helping operators and drilling contractors realize efficiency gains, whether by avoiding potential hazards in the well or by reducing nonproductive time (NPT) and invisible lost time (ILT).

Now, as the industry continues to integrate data-driven infrastructure into its workflows, technology developers are prioritizing solutions that can help contextualize data at scale, not just in isolated scenarios.

“You can learn from all the data coming in and recognize something as a situation you’ve seen before,” said Ted Furlong, Chief Data Scientist, Oilfield Services Digital at Baker Hughes. “You can point to the 30 other times you’ve seen this, point out the wells that you’ve done on a given campaign that are the most relevant. This kind of analytic application is really going to give you the type of confidence you need to get much better results.”

Minimizing inefficiencies

While Baker Hughes has been deploying data-focused systems for years, more recently the company has been specifically focused on incorporating data integration and data streaming mechanisms into advanced analytic applications. AI and machine learning are playing a huge role in this development. In June 2019, Baker Hughes and C3.ai announced a joint venture called BHC3.ai to develop digital technologies, combining the former’s oil and gas resources and the latter’s AI capabilities.

In April 2020, the companies deployed Drilling Hazard Prevention (DHP), an AI-enabled algorithm leveraging C3.ai capabilities with Baker Hughes’ physics-based models and domain expertise.

DHP combines historical field data, regulator data, operator data, service company data and rig data into a curated data model. Machine learning models train on daily drilling reports, mud logs and tool data to learn where stuck pipe, lost circulation and wellbore instability contribute to nonproductive time (NPT). These learnings are then integrated into the risk register and well plan, forming the basis for predictions of future trouble spots in similar wells that users can access in pre-well planning and real-time operations.

With AI and machine learning algorithms trained to target and rank relevant hazard information, Baker Hughes estimates a 60% reduction in the time that subject matter experts must dedicate to data analysis. In a recent North Sea project, the software helped field teams avoid an estimated four days of NPT by leveraging data from previously drilled wellbores with similarities to the planned well – in total, data from more than 640 wellbores was used for predictions.

In another North Sea well, the software reduced total analysis time for two unique wells from what would’ve been an estimated 20 hours to less than 3 minutes by isolating incorrect assumptions from an outdated geomechanical model within one minute of processing time.

The system also contains a live risk register that can be used between different stages of the well delivery process, providing live alerts that allow users to mitigate risks during drilling operations.

DHP is only available to a limited number of companies right now, but a full launch is anticipated later in the year. During this soft-launch period, the company is looking to demonstrate the system’s scalability among multiple wells covering large areas.

“It’s not so much the analytic scaling that we need to do, but it’s actually being able to bring all of the different data sources in and get the full data ingestion mechanism running for different fields,” Mr Furlong said. “It doesn’t do us any good to have one or two engineers load in data from a couple of wells.”

Baker Hughes has also developed its i-Trak Drilling Systems Automation software, which further streamlines and automates drilling processes at the rig site. One of the modules of this suite is i-Trak Automated Trajectory Drilling, a fully closed-loop system capable of accurately monitoring the wellbore trajectory in real time and deriving steering parameters/proposals to ensure the planned trajectory is achieved. If adjustments to the steering parameters are required to stay on track, the system automatically submits those steering commands to the downhole rotary steerable system.
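The closed-loop idea described above (compare the measured trajectory with the plan, derive a steering proposal, send it downhole) can be illustrated with a minimal sketch. This is not the i-Trak algorithm, whose internals are not described here. The `Survey` type, the proportional gain, the deadband and the step limit are all assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Survey:
    """Wellbore direction at one depth (hypothetical, simplified)."""
    inclination_deg: float
    azimuth_deg: float


def wrap_azimuth_error(target_deg: float, actual_deg: float) -> float:
    """Smallest signed angular difference in degrees, in [-180, 180)."""
    return (target_deg - actual_deg + 180.0) % 360.0 - 180.0


def steering_proposal(planned: Survey, measured: Survey,
                      gain: float = 0.5,
                      max_step_deg: float = 1.0,
                      deadband_deg: float = 0.1) -> tuple:
    """Return (inclination_step, azimuth_step) in degrees.

    A plain proportional correction with a deadband and a clamp.
    All three tuning values are illustrative assumptions.
    """
    inc_err = planned.inclination_deg - measured.inclination_deg
    azi_err = wrap_azimuth_error(planned.azimuth_deg, measured.azimuth_deg)

    def step(err: float) -> float:
        if abs(err) < deadband_deg:
            return 0.0  # close enough to plan: no command needed
        return max(-max_step_deg, min(max_step_deg, gain * err))

    return step(inc_err), step(azi_err)


if __name__ == "__main__":
    plan = Survey(inclination_deg=30.0, azimuth_deg=350.0)
    actual = Survey(inclination_deg=29.0, azimuth_deg=10.0)
    print(steering_proposal(plan, actual))
```

In a real closed loop, the proposal would be recomputed on every new survey and passed to the rotary steerable system only when it is non-zero, which is the role the deadband plays in this sketch.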

Baker Hughes first deployed the system for Equinor in October 2019, on an offshore development well in the Norwegian sector of the North Sea. The software was used to drill two sections of the well – an 8½-in. pilot hole section and a 12¼-in. section – within 7 ft of the planned well path, without safety incidents or NPT. Equinor now uses the system on eight of its North Sea rigs.

Since the initial deployment of i-Trak, Baker Hughes has been working on expanding the service’s capabilities, using real-time, in-line fluids measurements to evaluate equivalent circulating density (ECD) and applying automation to streamline hole-cleaning procedures, thereby lowering stuck-pipe incidents. It is also working to build capabilities that enable automatic geosteering based on specific reservoir properties.

In addition to minimizing drilling inefficiencies and reducing NPT, operators are also looking to drive efficiencies with drilling rig utilization and work scheduling, said Hans-Christian Freitag, VP of Intelligent Software Solutions at BHC3.ai.

One of the applications developed under the C3.ai partnership, BHC3 Production Optimization, was launched in February 2020 and used to help a large operator in Eastern Europe optimize well intervention planning to achieve better field economics with its onshore wells. This was done by creating a unified data image to help the operator visualize and compare various optimized scenarios.

To create the data model, which represented data from two oilfields with over 1,000 wells combined, more than 3.8 million rows of data from 11 disparate data sources were ingested. With the data model as the foundation, the team created integrated asset models that incorporated multiple reservoir, well and infrastructure models from the operator and a financial economic model built by the BHC3 team. A new optimizer was then configured using a Bayesian modeling approach to run on top of the integrated asset models; it conducted over 50 machine learning experiments across six use cases.

The project, which took 12 weeks to complete, delivered a customized, scalable workflow that the operator could use to determine optimal well intervention scenarios. The operator reported a 15% reduction in operational asset-related costs, a 10% improvement in resource performance and a 5% improvement in well intervention efficiency.

Automated belief system

Since its entry into oil and gas in 2013, Intellicess has zeroed in on systems that can help operators clean, process and analyze drilling data. The company’s Sentinel RT is an AI-based engine that helps identify and understand drilling dysfunctions and optimal drilling parameters, including weight-on-bit (WOB) and RPM, torque and drag, washouts, stuck pipe, kicks and lost circulation. The system has been commercially available since 2015 and used by operators, such as Apache, on drilling operations.

It uses a Bayesian network, which is a probabilistic graphical model that represents a set of variables and their conditional dependencies – effectively, it calculates the likelihood of an event given various external conditions. Bayesian networks can be used for a variety of tasks, including anomaly detection, diagnostics and decision making in uncertain environments. With the Sentinel system, the network aggregates data from rig floor sensors, contextual information and predictions from physics-based models. It then determines a probabilistic belief system indicative of potential downhole issues.

As the Bayesian network connects possible sensed variables, the belief system’s algorithms can differentiate between a sensor and a process fault, identify outliers in data and missing data, and correct for sensor bias.
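To make the sensor-versus-process distinction concrete, here is a toy Bayesian network solved by brute-force enumeration. It is a sketch under stated assumptions, not Sentinel RT's model: the two hidden causes (a washout and a faulty primary pressure sensor), the two observations and every probability below are invented for illustration.

```python
from itertools import product

# Prior probabilities of the two hidden causes (illustrative values).
P_WASHOUT = 0.02        # process fault
P_SENSOR_FAULT = 0.05   # primary pressure sensor fault


def p_primary_low(washout: bool, sensor_fault: bool) -> float:
    """P(primary sensor reads low | causes). A faulty sensor is uninformative."""
    if sensor_fault:
        return 0.5
    return 0.95 if washout else 0.02


def p_secondary_low(washout: bool) -> float:
    """P(independent second indicator reads low | washout)."""
    return 0.90 if washout else 0.05


def posterior(primary_low: bool, secondary_low: bool) -> tuple:
    """Return (P(washout | evidence), P(sensor fault | evidence))."""
    joint = {}
    for washout, fault in product([False, True], repeat=2):
        prior = ((P_WASHOUT if washout else 1 - P_WASHOUT)
                 * (P_SENSOR_FAULT if fault else 1 - P_SENSOR_FAULT))
        pa = p_primary_low(washout, fault)
        pb = p_secondary_low(washout)
        likelihood = ((pa if primary_low else 1 - pa)
                      * (pb if secondary_low else 1 - pb))
        joint[(washout, fault)] = prior * likelihood
    total = sum(joint.values())
    p_w = sum(v for (w, _), v in joint.items() if w) / total
    p_f = sum(v for (_, f), v in joint.items() if f) / total
    return p_w, p_f


if __name__ == "__main__":
    print("both indicators low:", posterior(True, True))
    print("primary low only:   ", posterior(True, False))
```

With these made-up numbers, two agreeing indicators push the belief toward a washout, while a low reading on the primary sensor alone pushes it toward a sensor fault. That is the kind of separation a network of connected sensed variables makes possible.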

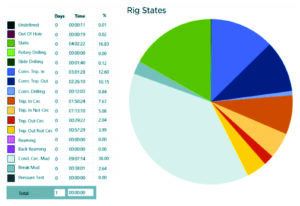

“‘Belief’ may be a strange word to use, but it is the basis for all of our decisions,” said Pradeep Ashok, Founder and CTO of Intellicess and a Senior Research Scientist at the University of Texas at Austin. “As humans, we look at data, we analyze patterns, and we develop sentiments at any given instant in time. With this system – by using a combination of physics-based models and data-based models – we’re forming a belief about the rig state, about bit bounce, stick-slip, whether a sensor has failed, etc. By looking at these models and the Bayesian network, you can understand what’s going on with the rig.”

Intellicess is currently working on a pair of updates for Sentinel RT. The first is a remote directional drilling application that would provide indications of potential issues ranging from poor toolface control to high friction and potential buckling in a given well. Trends would be identified from real-time data to determine the probability of an issue taking place. After identifying these issues, the application would provide suggestions for corrective actions. Intellicess is collaborating with a directional drilling company and an operator on this application, although no date has been set for a commercial launch.

Dr Ashok described the directional drilling application as a supplement to a company’s existing remote capabilities, lessening some of the workload of the professionals staffing a remote operating center. “In order to figure out what is going wrong, you have to look at different trends and put them together to detect all that is happening. For a person in a remote directional center, do we want them to look at those trends, or would it be easier to have an algorithm that figures everything out and basically tells them, for instance, that there’s the possibility of buckling? This frees the person in the data center from having to stare at the screen all the time and lets them handle situations by exception.”

The second update being developed is an “event alert” feature that would notify the driller, via the vertical strip chart, of potentially harmful events in the operation. The objective would be to prevent unwanted events, such as stuck pipe or bit failure, from happening.

One project related to this feature, completed in 2020, focused on the tracking of hole cleanliness in real time, an important step to preventing stuck pipe events during well construction. For this project, Intellicess worked with Apache to develop a method for establishing a “belief” in hole-cleaning efficiency over time by examining key events during the drilling process, thus helping to characterize hole conditions.

These events were then related through duration and frequency to probabilistic features in a Bayesian network, to infer the probability of whether the hole-cleaning process had been good or poor. Events were also weighted by their age to ensure that current beliefs are not too strongly influenced by those that are far in the past.
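One simple way to weight events by age is exponential decay, sketched below. The article does not say which weighting function was used, so the half-life form, the six-hour default and the event format are assumptions for illustration only.

```python
def age_weight(age_hours: float, half_life_hours: float = 6.0) -> float:
    """Weight that halves every `half_life_hours` (assumed decay form)."""
    return 0.5 ** (age_hours / half_life_hours)


def poor_cleaning_score(events, half_life_hours: float = 6.0) -> float:
    """Age-weighted share of events that point to poor hole cleaning.

    `events` is a list of (age_hours, indicates_poor_cleaning) pairs,
    a hypothetical format used only for this sketch.
    """
    weights = [age_weight(age, half_life_hours) for age, _ in events]
    total = sum(weights)
    if total == 0:
        return 0.0
    poor = sum(w for w, (_, is_poor) in zip(weights, events) if is_poor)
    return poor / total


if __name__ == "__main__":
    # A tight spot six hours ago counts half as much as good circulation now.
    events = [(0.0, False), (6.0, True)]
    print(round(poor_cleaning_score(events), 3))
```

A score like this could then feed a probabilistic feature in the network. The key property is that an old event never disappears abruptly; its influence simply fades.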

Apache deployed this method on its North American land rigs as part of a real-time drilling advisory in 2019 and 2020. The software containing this belief analysis ran continuously, in real time, on an edge device during drilling operations. The rig crew had access to a display indicating real-time hole-cleaning effectiveness belief outputs, as well as a time-based chart that tracks beliefs over the life of the well.

The events and features found to be most relevant to quantifying hole cleanliness were the circulation rates during drilling, tight spots when moving the drill string, bit hydraulics and prolonged periods of inactivity. The Bayesian network model relied on pattern analysis event logging procedures that kept track of hole-cleaning events over time, which consolidated several hours of drilling information into relevant hole-cleaning features that could be processed quickly. The event logging procedures also allowed the Bayesian network model to operate with less RAM and CPU power compared with a traditional cuttings transport model.

Because poor hole condition can be extrapolated from the hole-cleaning efficiency belief, this method was used in tandem with Sentinel RT’s alerting system to notify rig personnel if hole conditions became poor enough to warrant corrective action. This alert contained possible causes for the poor hole condition based on the efficiency beliefs, which helped expedite troubleshooting.

The model was validated statistically by comparing hole-cleaning efficiency beliefs with known hole-cleaning issues in six Apache wells – four with known issues and two control wells without. The model was 84.8% effective at detecting known hole-cleaning issues across the wells analyzed. On each rig, the system was run continuously on an edge computing device during well operations, providing real-time hole-cleaning effectiveness belief outputs based on the drilling data received by the device.

The system was helpful not only in alerting the drillers whenever hole cleanliness deteriorated but also in providing the most likely causes of the deterioration. This provided the rig crews real-time guidance to make actionable decisions to avoid a stuck-pipe situation.

Open source and data management

Digital transformation initiatives in E&P often run into a wall when it comes to deploying at scale. That is because data is typically siloed and does not lend itself to easy cross-domain collaboration. Moreover, there is often a lack of understanding between companies on how to handle data for specific projects. There can also be a lack of trust in the data because its lineage is often not available.

One industry group, The Open Group OSDU (Open Subsurface Data Universe) Forum, is hoping to address these issues by improving how data can be accessed and analyzed. Formed in 2018 by a group of major operators, including Shell, BP and Equinor, the OSDU Forum is a vendor-neutral technology consortium that promotes and utilizes technology standards, digital technologies and best practices for the business and technical issues related to subsurface data.

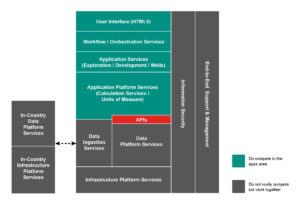

Its primary deliverable is the OSDU Data Platform, an open-source, cloud-based system designed to help maximize the use of subsurface and wells data in a standardized format. Companies can adopt the OSDU platform or build their own platforms using the OSDU platform framework, the data for which is available through a set of application programming interfaces (APIs).

The Mercury launch of the data platform in March makes it available for operational use by the wider oil and gas industry. Previously, the platform had only been available to a smaller number of OSDU members for experimental, learning and troubleshooting purposes.

The OSDU Data Platform is built on the concept of a defined data model. This means that true interoperability within the oil and gas industry will depend on having a critical mass of vendors and operators, many of whom are competitors, using the same model.

Johan Krebbers, who leads the OSDU Management Committee, said that convincing companies to work within the OSDU Forum has not been a problem so far, primarily because companies have recognized the difficulties in creating their own data platforms.

Moreover, there are many benefits for companies that build on the OSDU platform. First, there is a clearly defined framework of standards that ensure a company’s applications can run in any E&P digital environment that has also adopted the OSDU platform, said Mr Krebbers, who also serves as VP of IT Innovation and GM of Emerging Digital Technologies at Shell. Second, there will be a market and user base for applications developed to OSDU standards.

“If you don’t have the same standards, then you end up wasting a lot of time when you need to exchange data,” Mr Krebbers said. “If your data and my data are in different formats, there’s no money to be made. The margins in this market are slimmer and slimmer now, and companies are really focused on finding cost efficiencies. We can see those efficiencies by working together in a data space.”

Because the OSDU Data Platform currently deals only with subsurface data, one of the next steps is to incorporate additional data types, including those relating to well construction and well delivery. Work on this has been under way for a while.

Last year, the OSDU Forum partnered with the Drilling and Wells Interoperability Standards (D-WIS) initiative to create the OSDU D-WIS Project, which focuses on the development of industry standards and recommended practices that enable companies to connect devices, sensors and telemetry systems. This connectivity would allow users to share data and apps on new and existing rig control systems built within the OSDU Data Platform.

“D-WIS is important because we want drilling data to go into the OSDU platform so that it can be used for many other things. We want to make sure that drilling is fully integrated into our workflows,” Mr Krebbers said.

The D-WIS initiative began in 2018 as an effort to consolidate various industry efforts working on drilling automation, data quality and advanced analytics, including work done by the SPE Drilling Automation Technical Section and the Operators Group for Data Quality. The initiative aims to enable open and interoperable communication among key components and systems used in well construction, including rig/surface systems and downhole equipment. The goal is to leverage existing fit-for-purpose standards, communication protocols, recommended practices and data models into one system, enabling plug-and-play capabilities in the drilling and wells space.

Upon the creation of the OSDU D-WIS Program in 2020, 50 subject matter experts representing 25 companies from the OSDU Forum membership were placed into three work groups focusing on three separate work scopes. The intent is to deliver standards and systems that can be used within the OSDU Data Platform and expand these capabilities into an edge computing platform.

One work group is focusing on the development of a rig-site connectivity framework (RCF) that would integrate data acquisition systems, controls and all data-dependent services on the rig. This group’s work scope includes the determination of data requirements for all phases of well construction, as well as the development of a semantic network for real-time drilling signals using common data models. It is also working on contextual data handling, defining the requirements for contextual data needs at the rig site, and enabling the dissemination and access of contextual data to users.
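The value of a common data model for real-time signals can be shown with a small sketch: two vendors publish the same measurement under different tags and units, and a mapping layer normalizes both. The vendor tags, canonical names and the `SignalDefinition` type are hypothetical and are not taken from any D-WIS or OSDU specification. Only the unit conversion factors are standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SignalDefinition:
    """Maps one vendor tag to a canonical name and SI unit (hypothetical)."""
    canonical_name: str
    si_unit: str
    scale_to_si: float


# Illustrative vendor dictionaries; the tag names are invented.
VENDOR_A = {
    "WOB_KLBF": SignalDefinition("weight_on_bit", "N", 4448.2216),
    "SPP_PSI": SignalDefinition("standpipe_pressure", "Pa", 6894.757),
}
VENDOR_B = {
    "bit_weight_kN": SignalDefinition("weight_on_bit", "N", 1000.0),
    "standpipe_bar": SignalDefinition("standpipe_pressure", "Pa", 100000.0),
}


def normalize(raw: dict, mapping: dict) -> dict:
    """Convert vendor readings to {canonical_name: (value_in_si, unit)}.

    Tags that are missing from the mapping are skipped, not guessed.
    """
    out = {}
    for tag, value in raw.items():
        definition = mapping.get(tag)
        if definition is None:
            continue
        out[definition.canonical_name] = (
            value * definition.scale_to_si, definition.si_unit)
    return out


if __name__ == "__main__":
    print(normalize({"WOB_KLBF": 10.0, "UNKNOWN": 1.0}, VENDOR_A))
    print(normalize({"bit_weight_kN": 44.482216}, VENDOR_B))
```

Once every source is expressed in the same names and units, downstream applications can consume a signal without knowing which rig system produced it.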

The second group is working on the development of a standardized interface that incorporates process supervisory control systems, rig operating systems and the RCF, so that data can easily be shared between users on a rig site regardless of the control system being used on a particular rig. The primary objectives of this group center around the functionality of the interface. It is working to define requirements for the equipment-control interfaces, to determine how the interface will use commands across multiple pieces of equipment to execute rig processes, and to develop an architecture for automating rig processes and equipment via batch commands.
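As a rough illustration of what batch commands across multiple pieces of equipment could look like, the sketch below routes a sequence of commands through one interface and stops at the first failure. It is purely hypothetical: the `RigInterface` class, the command tuple and the equipment names are assumptions, not part of any published D-WIS design.

```python
from typing import Callable, Dict, List, Tuple

# (equipment, action, setpoint): a hypothetical command shape.
Command = Tuple[str, str, float]
Handler = Callable[[str, float], bool]


class RigInterface:
    """Routes commands to registered equipment handlers (illustrative only)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, equipment: str, handler: Handler) -> None:
        """Attach the handler that executes commands for one piece of equipment."""
        self._handlers[equipment] = handler

    def run_batch(self, commands: List[Command]) -> List[Command]:
        """Execute commands in order and return those that completed.

        Execution stops at the first unknown equipment or failed command,
        so later steps never run on top of a failed earlier step.
        """
        completed: List[Command] = []
        for equipment, action, setpoint in commands:
            handler = self._handlers.get(equipment)
            if handler is None or not handler(action, setpoint):
                break
            completed.append((equipment, action, setpoint))
        return completed


if __name__ == "__main__":
    rig = RigInterface()
    rig.register("mud_pump", lambda action, value: True)
    rig.register("top_drive", lambda action, value: value <= 200.0)
    batch = [("mud_pump", "set_flow", 500.0), ("top_drive", "set_rpm", 120.0)]
    print(rig.run_batch(batch))
```

Because callers address equipment by name through a single interface, the same batch could in principle run on rigs with different control systems, provided each registers compatible handlers.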

The third group is working on aligning the architecture of an OSDU D-WIS Edge system that incorporates the services developed by the other two groups. This group is securing edge hardware for this purpose and developing a systems requirements document that defines both the components needed to run the edge system and the plan for assembling these components into a workable system. This group will provide the reference specifications and implementations for different levels of hardware within the system.

The OSDU D-WIS program began testing proof of concept for the deliverables of the first two work groups in June 2021, and it plans to hold a live demonstration of the edge system by the end of this year.
