The art of data science: Industry homes in on reliability, ease of use

Recent efforts touch on standardization, aggregation from disparate sources, automated processing and customizable delivery to users

By Stephen Whitfield, Associate Editor

For years, the oil and gas industry has looked for ways to translate the massive amounts of data generated by its rigs and wells into actionable insights. Companies knew that improving the data’s accuracy, reliability and ease of access would provide significant value, allowing them to drill wells faster, more cheaply and more safely. However, they faced challenges, such as the lack of a uniform definition of what high-quality data even is. Navigating the data needs of different companies working in different areas has been another major challenge for service providers.

“The phrase ‘data quality’ has been spinning so much around the industry in the last few years, and it is not easy to neatly wrap up the conversation,” said Lars Olesen, VP of Product and Technology at Pason Systems. “People use the term data quality ubiquitously, but, depending on their perspective, they might be referring to the calibration of rig sensors, nomenclature of WITSML tags, gaps in the data, or one of infinite other issues. It is a journey to find what is important to the customer.”

But what’s not ambiguous is that data quality is more important than ever. With recent advances in data science, service providers are seeing an increased uptake of downhole and surface data for automation and analytics applications. The last couple of years have seen these service providers launch new systems, or participate in industry initiatives, designed to standardize and optimize data. The goal is to make it easy for personnel at the rig or in the office to understand the data and maximize productivity from their wells.

“When we think about automation, what are some of the key areas where we see a benefit?” asked Matthias Gatzen, Executive Digital and Automation Director at Baker Hughes. “It’s repeatability: We’re able to deliver consistent, predictable performance for every single well every time. It’s efficiency: We’re streamlining our operations with efficient drilling, fewer people on board. It’s accuracy: We’re developing automation services that really deliver precise well placement, and that drives efficiencies and improved hydrocarbon recovery.”

Standardized naming conventions

As one of the largest providers of data services to the drilling industry, Pason Systems is well aware of the industry’s current challenges. The company said it organizes customer data quality needs around a theme of “simple, reliable and secure.” In the company’s experience, technology has advanced to the point that conversations with customers now center on reliability and ease of access more than on accuracy and precision. This is of paramount importance to the industry – operators and drilling contractors want simple, reliable ways to receive their data – so Pason continues to look for new opportunities to simplify the data ingestion process for its customers.

Participating in industry standardization projects is one of several ways Pason is working to simplify data ingestion for drilling automation systems. For example, last year the company joined an ad hoc committee of operators, drilling contractors, service companies and data acquisition vendors to recommend standardized nomenclature and equations for the use of mechanical specific energy (MSE) in drilling operations. The committee was led by Fred Dupriest, professor of engineering practices at Texas A&M University.

Used as a metric for drilling efficiency, MSE is a mathematical calculation of the energy used per volume of rock drilled. The lack of standardized nomenclature for MSE has led to inconsistencies in the limiter redesign process, which was developed by Mr Dupriest during his career with ExxonMobil and which many teams use today to continually improve drilling performance.
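For reference, the original Teale formulation, from which later MSE variants evolved, adds an axial term (weight on bit over bit area) to a rotary term (rotary work divided by the volume of rock removed). The sketch below assumes common US field units (lbf, in., rpm, ft-lbf, ft/hr), in which the constant 120π combines 2π radians per revolution with 60 minutes per hour so the result comes out in psi. The function and parameter names are illustrative only and are not taken from any vendor's software.

```python
import math


def teale_mse_psi(wob_lbf: float, bit_diameter_in: float, rpm: float,
                  torque_ftlbf: float, rop_ft_per_hr: float) -> float:
    """Original Teale MSE, in psi, using US field units.

    MSE = WOB / A + 120 * pi * N * T / (A * ROP)
      WOB: weight on bit (lbf)       A: bit area (in^2)
      N:   rotary speed (rpm)        T: torque (ft-lbf)
      ROP: rate of penetration (ft/hr)
    """
    if rop_ft_per_hr <= 0:
        raise ValueError("ROP must be positive to compute MSE")
    area_in2 = math.pi * bit_diameter_in ** 2 / 4.0
    axial_term = wob_lbf / area_in2
    # 120*pi = 2*pi rad/rev * 60 min/hr, so rotary work per unit volume is in psi
    rotary_term = 120.0 * math.pi * rpm * torque_ftlbf / (area_in2 * rop_ft_per_hr)
    return axial_term + rotary_term


# Illustrative inputs only, not field data: 8.5-in. bit, 25,000 lbf WOB,
# 120 rpm, 10,000 ft-lbf torque, 60 ft/hr.
print(round(teale_mse_psi(25_000, 8.5, 120, 10_000, 60)))
```

Because the equation has a single speed and a single torque, it describes one rotating system; that limitation is what matters once a motor is added to the string.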

Rig-site personnel and remote operations engineers who watch MSE traces from an electronic drilling recorder (EDR) typically choose from a list of two to four traces with MSE-related names. The users rarely know the equations or real-time measurements associated with each trace, which then affects their ability to diagnose the root cause of bit inefficiency.

Bob Best, Director of Cloud Solutions at Pason, said concern about this inconsistency among data scientists and drillers has grown as MSE has become a key element in many automated optimization schemes. Inconsistent MSE values, whether calculated in real time or shared in large datasets, undermine the industry’s ability to develop useful analytics or automate rig control platforms, he explained. They can also make it more difficult for drillers to make data-driven decisions at the rig site.

“A data scientist or anybody looking at multiple wells – or even looking at one well – will struggle to find what they are looking for if the naming is inconsistent from well to well, or rig to rig. It is a waste of time, and the waste multiplies when doing a group-level analysis on many wells. Now they must find the same piece of data with a different name from hundreds of wells. There are substantial time savings upfront from simple standardization,” Mr Best said.

The ad hoc committee formed last year identified two primary uses and nomenclature descriptions of the MSE concept. Total MSE is the total mechanical energy expended in the drill string and the bit – this has previously been called surface MSE, system MSE and rig MSE. Downhole MSE, also known as bit MSE or motor MSE, is the mechanical energy expended at the bit. These two forms of MSE evolved from the original Teale equation, which was popularized almost 20 years ago.
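When data arrive from many rigs, one practical use of the two standardized names is a lookup table that maps legacy trace names onto them before any multi-well analysis. The sketch below uses the aliases listed above; the exact spellings found in a given EDR export, the helper names and the sample values are hypothetical.

```python
# Hypothetical lookup: legacy MSE trace names -> committee nomenclature.
# Keys are normalized (lower case, single spaces) forms of exported names.
MSE_ALIASES = {
    "total mse": "Total MSE",
    "surface mse": "Total MSE",
    "system mse": "Total MSE",
    "rig mse": "Total MSE",
    "downhole mse": "Downhole MSE",
    "bit mse": "Downhole MSE",
    "motor mse": "Downhole MSE",
}


def normalize(name: str) -> str:
    """Lower-case a channel name and collapse underscores and extra whitespace."""
    return " ".join(name.replace("_", " ").split()).lower()


def standardize_channel(name: str) -> str:
    """Return the standardized name, or the original if it is not an MSE alias."""
    return MSE_ALIASES.get(normalize(name), name)


def standardize_well(channels: dict) -> dict:
    """Rename one well's channels; refuse to silently merge two traces."""
    renamed = {}
    for name, values in channels.items():
        new_name = standardize_channel(name)
        if new_name in renamed:
            raise ValueError(f"two traces map to {new_name!r}; check their definitions")
        renamed[new_name] = values
    return renamed


# Illustrative values only, not field data.
well_a = {"Surface_MSE": [41.2, 39.8], "ROP": [55.0, 57.1]}
well_b = {"RIG MSE": [40.5, 42.0], "ROP": [60.2, 58.9]}
print(list(standardize_well(well_a)), list(standardize_well(well_b)))
```

Raising an error on a collision, rather than overwriting, reflects the point of the standard: two traces with different legacy names may have been calculated differently, so they should not be merged without checking.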

“The introduction of mud motors and horizontal well trajectories with significant wellbore friction meant that the original MSE equation had to be reformulated into two different measurements,” said Stephen Lai, Manager of the Drilling Systems Research team at Pason. “To obtain meaningful results, it is helpful for a data scientist to be able to specify which MSE is being analyzed and to know that it has been calculated the same way on all the wells,” he added.

When a motor is not installed, total MSE is calculated using surface weight on bit, top drive torque and top drive speed. When a motor is present, however, the calculation becomes more complex because the string and the bit rotate at different speeds and under different torques. The original Teale equation cannot be used, as it accommodates only one RPM and one torque. The committee found that, to calculate energy correctly, the equation must be reshaped so that the drill string RPM is multiplied by the string torque and the bit RPM is multiplied by the bit torque. The two products can then be added to obtain the total work.
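The reshaping described above amounts to replacing the single RPM × torque product in the Teale rotary term with a sum of products, one per rotating element. A minimal sketch, assuming the same US field units and 120π constant as the Teale form: with one (rpm, torque) pair it reduces to the original equation, and with a motor it takes a string pair and a bit pair. Exactly which measured torque counts as “string torque” and which speed as “bit RPM” should be taken from the committee’s published definitions; the function name and signature here are illustrative.

```python
import math
from typing import Iterable, Tuple


def total_mse_psi(wob_lbf: float, bit_diameter_in: float, rop_ft_per_hr: float,
                  rotations: Iterable[Tuple[float, float]]) -> float:
    """MSE in psi whose rotary term sums rpm * torque over each rotating element.

    rotations: (rpm, torque_ftlbf) pairs, e.g.
      no motor: [(top_drive_rpm, top_drive_torque)]
      motor:    [(string_rpm, string_torque), (bit_rpm, bit_torque)]
    """
    if rop_ft_per_hr <= 0:
        raise ValueError("ROP must be positive to compute MSE")
    area_in2 = math.pi * bit_diameter_in ** 2 / 4.0
    # Each speed is paired with its own torque; the products are then added.
    rotary_power = sum(rpm * torque for rpm, torque in rotations)
    return wob_lbf / area_in2 + 120.0 * math.pi * rotary_power / (area_in2 * rop_ft_per_hr)


# Illustrative inputs only: motor case, string at 40 rpm and bit at 190 rpm.
print(round(total_mse_psi(20_000, 8.5, 80, [(40, 9_000), (190, 6_000)])))
```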

The real-time calculation of downhole MSE by an EDR requires the input of bit torque and speed. These variables can be generated in one of three ways. If a motor is in use, they can be obtained from the surface flow rate, motor differential and the specific motor’s speed and torque factors. If the MWD measurements are available, the values can be ported directly into the EDR. If there is no downhole data available, a theoretical bit torque can be estimated by modeling the drill string’s rotational drag and subtracting this value from the top drive torque.
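The three sources of bit torque and speed described above form a natural fallback chain. The sketch below assumes US field units, a motor speed factor in revolutions per gallon and a torque factor in ft-lbf per psi of differential pressure. It prefers MWD measurements whenever both MWD data and motor inputs are available; that ordering is an assumption, not something stated by Pason, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BitEstimate:
    rpm: float
    torque_ftlbf: float
    source: str  # which of the three methods produced the estimate


def estimate_bit_rpm_torque(
    surface_rpm: float,
    top_drive_torque_ftlbf: float,
    mwd_rpm: Optional[float] = None,
    mwd_torque_ftlbf: Optional[float] = None,
    flow_gpm: Optional[float] = None,
    motor_diff_psi: Optional[float] = None,
    motor_rev_per_gal: Optional[float] = None,
    motor_ftlbf_per_psi: Optional[float] = None,
    modeled_drag_torque_ftlbf: Optional[float] = None,
) -> BitEstimate:
    # 1) Downhole measurements ported directly from MWD (preferred in this sketch).
    if mwd_rpm is not None and mwd_torque_ftlbf is not None:
        return BitEstimate(mwd_rpm, mwd_torque_ftlbf, "mwd")
    # 2) Motor in use: derive from flow rate, differential and the motor's factors.
    motor_inputs = (flow_gpm, motor_diff_psi, motor_rev_per_gal, motor_ftlbf_per_psi)
    if all(v is not None for v in motor_inputs):
        rpm = surface_rpm + flow_gpm * motor_rev_per_gal  # string rotation + motor output
        torque = motor_diff_psi * motor_ftlbf_per_psi
        return BitEstimate(rpm, torque, "motor")
    # 3) No downhole data: subtract modeled rotational drag from top drive torque.
    if modeled_drag_torque_ftlbf is not None:
        torque = max(top_drive_torque_ftlbf - modeled_drag_torque_ftlbf, 0.0)
        return BitEstimate(surface_rpm, torque, "drag_model")
    raise ValueError("no source available for bit speed and torque")


# Illustrative motor case: 40 rpm at surface, 600 gpm, 400 psi differential.
print(estimate_bit_rpm_torque(40, 12_000, flow_gpm=600, motor_diff_psi=400,
                              motor_rev_per_gal=0.25, motor_ftlbf_per_psi=20))
```

Recording the source alongside each estimate lets an analyst later filter or weight wells by how their bit values were obtained.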

Pason incorporated the standardized equations and nomenclature into its software this spring. Mr Olesen is optimistic but said industry buy-in will set the pace of adoption, as some companies must transition away from their own naming conventions.

“People often overlook the nature of a drilling rig; it is a remote place where a lot of people with diverse backgrounds have come together only temporarily to do a job,” Mr Olesen commented. “It will take time to educate the importance of separating the two types of MSE. It is a process of continuously answering how the new nomenclature will benefit people who are critical to the drilling workflow but have a long list of other priorities and responsibilities.”

Visualization data for multidisciplinary use

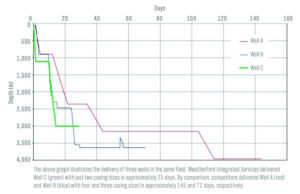

Weatherford’s recent activity in data science has focused on developing what the company calls a “solutions-oriented digital approach” to managing wellsite operations. Its CENTRO system, launched in March 2020, was built with this goal in mind.

CENTRO is a real-time well construction optimization software platform that aggregates high-resolution data from multiple sources, including the rig site, offset well databases, geological and geophysical data, and third-party applications. Within seconds, it transfers the data across a secured network to a centralized repository hosted either in the cloud or on a company’s on-premises server. From there, personnel can access relevant project information at any time. The platform offers visualization tools, as well as automated, condition-based alarms for early detection of unexpected downhole events.
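Aggregating streams that arrive at different rates and timestamps usually starts with placing them on a common time index. As an illustration of a generic technique only, not a description of how CENTRO works internally, the sketch below performs an “as-of” alignment: for each timestamp it takes the latest sample from each source that is no older than a chosen limit, and records a gap otherwise. Source names, sample values and the 5-second limit are hypothetical.

```python
from bisect import bisect_right
from typing import Dict, List, Optional, Tuple

# One source = list of (timestamp_seconds, value), sorted by timestamp.
Series = List[Tuple[float, float]]


def align_sources(sources: Dict[str, Series], timeline: List[float],
                  max_age_s: float = 5.0) -> List[Dict[str, Optional[float]]]:
    """As-of join: latest sample at or before each time, if recent enough."""
    times = {name: [ts for ts, _ in series] for name, series in sources.items()}
    rows = []
    for t in timeline:
        row: Dict[str, Optional[float]] = {"time": t}
        for name, series in sources.items():
            i = bisect_right(times[name], t) - 1  # last sample with timestamp <= t
            if i >= 0 and t - series[i][0] <= max_age_s:
                row[name] = series[i][1]
            else:
                row[name] = None  # gap: no sufficiently recent sample
        rows.append(row)
    return rows


# Hypothetical streams: rig hookload every 2 s, third-party pressure at odd times.
sources = {
    "hookload_klbf": [(0.0, 210.0), (2.0, 212.5), (4.0, 211.8)],
    "spp_psi": [(1.0, 3050.0), (3.5, 3075.0)],
}
for row in align_sources(sources, [0.0, 2.0, 4.0, 12.0]):
    print(row)
```

Marking stale values as gaps, rather than carrying the last value forward indefinitely, keeps data dropouts visible to whoever consumes the aggregated view.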

Manoj Nimbalkar, VP – Digital Solutions at Weatherford, said the platform allows users to compare real-time data with simulation models to avoid potential issues during drilling. Real-time data transmission during drilling and completions operations can provide rapid insights into changing conditions. A customizable multi-well, multi-domain operational dashboard enables experts to manage these changing conditions on each well.
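A condition-based alarm of this kind can be as simple as comparing each real-time sample with the corresponding model prediction and alarming only when the deviation persists. The sketch below assumes a fractional tolerance and a persistence count, both hypothetical tuning parameters; the measured and modeled series might be, for example, surface torque against a torque-and-drag model prediction.

```python
from typing import List, Sequence


def deviation_alarms(measured: Sequence[float], modeled: Sequence[float],
                     tolerance_frac: float = 0.15, persist: int = 3) -> List[int]:
    """Indices at which an alarm is raised.

    A sample deviates when |measured - modeled| > tolerance_frac * |modeled|.
    The alarm fires once, on the `persist`-th consecutive deviating sample,
    so single-sample spikes and sensor noise do not trigger it.
    """
    alarms: List[int] = []
    run = 0  # length of the current streak of deviating samples
    for i, (meas, pred) in enumerate(zip(measured, modeled)):
        if abs(meas - pred) > tolerance_frac * abs(pred):
            run += 1
            if run == persist:
                alarms.append(i)
        else:
            run = 0
    return alarms


# Hypothetical surface torque (kft-lbf) against a model prediction of 10.0.
measured = [10.1, 12.0, 12.1, 12.2, 12.3, 10.0, 13.0, 13.1]
modeled = [10.0] * len(measured)
print(deviation_alarms(measured, modeled))
```

The persistence count trades detection speed for fewer false alarms; a shorter count flags problems sooner but reacts to more noise.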

Mr Nimbalkar also noted that the ability to visualize data from disparate sources, and customize that view, creates tremendous value. “No engineer wants to see the data exactly as another engineer sees it. They want a standard format, but they’ll want to customize it based on how they will utilize that data. You have your standard real-time data coming in, your torque and drag models, and your hydraulics and other engineering models. If you want to see your wireline logs, you can drag and drop them on the screen. I think that is the beauty of the multi-domain visualization,” he said.

Last year, Weatherford collaborated with a major operator in Comalcalco, Mexico, to drill a deviated, J-type onshore well. In previous attempts, the operator failed to reach the planned total depth, experienced three stuck-pipe events and accrued more than eight days of nonproductive time (NPT) because of challenges with different pressure regimes over a single drilling interval.

Weatherford used an integrated services solution incorporating the CENTRO platform with its Victus intelligent managed pressure drilling (MPD) system and Magnus rotary steerable system. Before the job, the Weatherford Integrated Services team connected the CENTRO platform with operator data fed in by rig sensors, and it customized 15 real-time operational dashboards for different disciplines. It tested data transmission with Weatherford and third-party software and trained project personnel on functionality. A real-time operations center (RTOC) supported data transmission and availability.

During the job, CENTRO supported data aggregation and secure data storage. It provided web-based, real-time visualizations of the well to a multidisciplinary unit composed of Weatherford specialists from drilling, MPD and drilling fluids. It also enabled real-time data monitoring, giving access to more than 100 users from Weatherford product lines, RTOC support personnel, the operator and three external vendors.

In the end, the operator was able to achieve total depth with less than 5% NPT and zero well control incidents. The well was drilled and completed 42 days faster than the operator’s best offset well, saving 62% in rig time, valued at $1.81 million. In addition, the operator reported a 32% higher production rate than expected.

Mr Nimbalkar said Weatherford will continue innovating the CENTRO platform to enhance its predictive capabilities. Later this year, the company plans to roll out new machine learning algorithms that augment traditional engineering models to predict when stuck-pipe incidents may occur. Predictive algorithms to optimize the rate of penetration during drilling are also under development.

“These are critical considerations for our customers because these are the problems they are trying to solve daily,” Mr Nimbalkar said. “I see many companies and startups developing machine learning algorithms in a rush to provide machine learning that does not provide real solutions to the operators’ challenges. That’s why we are focused on solving operators’ biggest challenges.”

Automated processing of sensor data

Baker Hughes’ recent efforts around data analysis and processing include updating its i-Trak drilling automation system to better leverage downhole sensor data. Last year, the company introduced a navigation module for the system, designed to automate steering within the reservoir. The module relies on interactive software simulations of the downhole environment, generated from sensor data, to provide a real-time picture and automatically suggest an optimal path for the wellbore.

Mr Gatzen said the module enables users to place the wellbore more accurately. Also, by automating the processing of the downhole sensor data needed to visualize the wellbore’s path, users can drill much faster.

“We’re really leveraging our downhole sensor data,” he said. “We have high-tech sensors downhole, and we’re pairing that up with the automatic processing of the data that’s provided by those sensors. In effect, what you’re doing is automating your steering application within the reservoir. It’s a huge opportunity with a growing technology.”

The automated reservoir navigation module has shown positive results for operators in the field so far. In the second half of 2021, an operator working in the North Sea used the module to help drill 8,000 m in a single run, the longest section that operator has drilled in that field. The navigation module was used in concert with Baker Hughes’ AziTrak deep azimuthal resistivity measurement tool to geosteer into the most productive reservoir zone. The operator saw an increase in drilling speeds, going from an average of 60 m/hr in prior runs to 80 m/hr with the module.
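As a rough illustration of what the reported rates mean, the snippet below assumes the two averages applied across the whole 8,000 m section and ignores connections, surveys and other off-bottom time. The resulting hours are back-of-envelope estimates, not figures reported by the operator.

```python
section_m = 8_000
prior_rop_m_per_hr = 60.0  # average in prior runs
new_rop_m_per_hr = 80.0    # average with the navigation module

speedup = new_rop_m_per_hr / prior_rop_m_per_hr - 1  # fractional increase in ROP
hours_prior = section_m / prior_rop_m_per_hr         # on-bottom hours at the old rate
hours_new = section_m / new_rop_m_per_hr             # on-bottom hours at the new rate

print(f"ROP increase: {speedup:.0%}")
print(f"On-bottom time: {hours_prior:.1f} h -> {hours_new:.1f} h")
```

This prints a 33% increase in ROP and roughly 133 versus 100 on-bottom hours for the section under those assumptions.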

Baker Hughes also added a module to the i-Trak system last year that uses downhole sensor data to detect at the earliest possible time the hard calcite stringers interbedded in a given formation. The company developed a set of algorithms that automatically detects stringers and informs personnel of the actions needed to either mitigate the issues caused by drilling through a stringer or avoid the stringer altogether.
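The detection algorithms themselves are not described here, so the following is only a hypothetical illustration of the general idea: drilling into a hard stringer tends to show up as a sudden jump in the energy needed to remove rock, so one simple flag compares each new MSE sample against the median of the preceding few samples. The window length and spike ratio are assumed tuning parameters, and a production system would combine several downhole measurements rather than rely on a single MSE trace.

```python
from collections import deque
from statistics import median
from typing import List, Sequence


def flag_hard_stringers(mse: Sequence[float], window: int = 5,
                        spike_ratio: float = 1.8) -> List[int]:
    """Indices where MSE exceeds spike_ratio x the median of the prior `window` samples.

    The median baseline is robust to a single outlier in the window; no flags
    are raised until `window` samples of history have accumulated.
    """
    history: deque = deque(maxlen=window)
    flagged: List[int] = []
    for i, value in enumerate(mse):
        if len(history) == window and value > spike_ratio * median(history):
            flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical MSE trace (ksi): soft sand, a short hard interval, then sand again.
trace = [20, 21, 19, 20, 22, 45, 46, 21, 20]
print(flag_hard_stringers(trace))
```

For this made-up trace the two high samples at indices 5 and 6 are flagged. In a long stringer the baseline would eventually catch up with the higher values, which is one reason a real detector would also watch trends in other downhole signals.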

“If you hit the stringers as you’re drilling, you see a deviation in the well path, and if you don’t catch that quickly enough, ultimately, you’ll have to backtrack and maybe clean your wellbore so you can complete it afterwards – if you have a micro dogleg, it can be very hard to complete a well. So, this detection module can be valuable,” he said.

In late 2021, the company deployed the stringer detection module for a North Sea operator that had struggled to drill through hard calcite layers within softer sand formations. The calcite layers were causing deflection of the wellbore, knocking the bit off its preplanned path upon contact. Prior to the technology’s introduction, the operator was spending an average of 2.5 hours on reaming for every 1,000 m drilled. After using the stringer detection module, that figure went down to 50 minutes per 1,000 m. On its most recent well, drilled in May, the operator averaged only 20 minutes per 1,000 m.

Beyond the software updates, Baker Hughes has been looking at utilizing data to improve asset integrity management protocols. In March, the company announced a collaborative project with C3.AI, Accenture and Microsoft on industrial asset management solutions for the energy and industrial sectors, including the oil and gas industry. As part of the project, Baker Hughes will create and deploy asset management systems to help improve the safety, efficiency and emissions profile of industrial machines, field equipment and other physical assets. It will also work on developing capabilities to optimize plant equipment, operational processes and business operations through improved uptime, increased operational flexibility, capital planning and energy efficiency management.

C3.AI partnered with Baker Hughes and Microsoft last year on the Open AI Energy Initiative, an open ecosystem of AI-based systems for the energy industry. As part of this project, C3.AI will build a flexible AI application to support the deployment of Baker Hughes systems at scale. Microsoft will provide cloud infrastructure to store the data generated from the facilities and equipment, as well as high-performance computing systems to help process the data.

“At the end of the day, when you’re thinking about industrial asset management, the focus is on driving the performance,” Mr Gatzen said. “By bringing in these different partners – us with the digital and domain knowledge, C3.AI with the flexible AI application layer, Accenture with the product design and Microsoft with the secured cloud infrastructure – we have a new way to leverage data for our customers.” DC
