Why people don’t see hazards: a human performance perspective

Understanding hindsight bias can help organizations make necessary changes to the investigation process and to training for leaders and HSE professionals

By Marcin Nazaruk, Baker Hughes

“Failure to recognize the hazard” is commonly listed as a root cause in incident investigations in the oil and gas industry. Investigators, leaders and safety professionals often ask, “Why didn’t they see what was going to happen?” or “How could they have not recognized the hazard? It was obvious that it would lead to X.”

The answer appears to be simple: complacency, laziness and not paying attention. Workers just did not try hard enough, and they must not have followed procedures or conducted a good risk assessment.

How, then, do we prevent the recurrence of similar incidents in the future? Popular practice makes it quite simple, right? Discipline and retrain personnel. After all, everyone knows that fear is the best way to make people pay attention. The cycle repeats until the next incident occurs.

To advance this discussion, let’s shift our thinking by asking a different question: Why do we tend to explain incidents by simplistically stating that the worker failed to see the hazard?

Consider a simple example. A worker was working at a height of 10 ft. They did not take a drops prevention device with them and worked without securing their tools. They completed the job successfully without any issues or non-conformances and moved on to their next activity.

How would you describe the worker’s behavior on a scale from 1 (completely acceptable) to 10 (completely unacceptable and blameworthy)?

Now, let’s consider the same scenario but with a slight change.

A worker was working at a height of 10 ft. They did not take a drops prevention device with them and worked without securing their tools. The worker dropped their 2-lb hammer onto a pathway and severely injured another worker.

How would you describe the worker’s behavior on a scale from 1 (completely acceptable) to 10 (completely unacceptable and blameworthy)?

Was there a difference in your answers?

Typically, people will judge the same behavior more harshly, and as more blameworthy, if it resulted in a negative outcome. Knowledge of the outcome changes how we interpret the past, and it even changes our memory without us noticing.

Let us consider a more personal example.

Think about your work situation today – all the projects and efforts in which you are involved – and think about what could go wrong. You can likely list several possibilities, but it’s unlikely that you could predict any one scenario with high accuracy, because the future is uncertain and ambiguous. Yet, despite that, you are doing your best using your knowledge, skills, limited resources and information.

Then, imagine that your boss has just called you and, with an irritated tone of voice, asked you to immediately see him or her to talk about an unexpectedly large financial loss on one of your projects. Let’s take a pause here and reflect on that for a moment. Imagine, if you really had received this message right now, what would you report as the causes of the loss?

When someone becomes aware of the outcome of an event or situation, they tend to misremember what they knew about that event, even if it happened just minutes ago.

Could you easily point to specific situations in the recent past that might have led to this? Could you easily identify root causes of this failure? Why was it vague and difficult to predict one minute, but in the next minute you could point to the causes with ease?

If your partner or a close friend, upset by this situation, accused you of “failing to recognize weak signals,” how helpful would that be to truly understanding what happened?

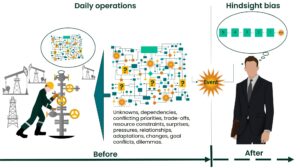

The knowledge of the outcome is immediately integrated into what we know about the situation, and it changes how we interpret the past. This effect is called hindsight bias.

Dr Baruch Fischhoff, an expert specializing in how people make decisions under uncertainty and the first researcher who identified this effect, says hindsight bias “can seriously restrict one’s ability to learn from the past.”

Experiments conducted with court judges, medical doctors and many other professional groups have led to fascinating findings:

- After becoming aware of the outcome, people misremember what they knew about the situation, even if it was just minutes ago;

- After finding out the outcome, people tend to see the outcome as more probable than they saw it before knowing the outcome (you may hear people say “I knew it was going to happen”);

- People are unaware that this effect is taking place in their minds and how it clouds their judgement; and

- People judge behaviors leading to a negative outcome more harshly, and as more blameworthy, than the very same behaviors leading to a neutral or positive outcome.

Dr David Woods from Ohio State University says in his book on human error: “Simply telling people to ignore outcome information is not effective. Telling people about hindsight bias and to be on guard for it does not seem to be effective either.”

So, what are the practical solutions?

The most effective method is to consider alternatives to the actual outcome. Thinking back to our example of the worker at height, we would ask our worker after the event to list as many potential negative outcomes related to this activity as possible and walk us through how each could have unfolded. This helps to remove the illusion that it was easy to see and predict. The list could include:

- Difficulties with getting the right tools and potentially damaging the bolts, due to the warehouse admin double hatting and not being available when needed to provide tools;

- Lack of information on the model of equipment and torque parameters due to out-of-date procedures could lead to increased vibration and equipment damage in the long term;

- Doing work in the middle of a turnaround and having 50 other contractors on site who do not know the rules and walk through the barricaded area, as those rules were not covered in their pre-job briefing;

- Simultaneous operations impacting logistics, as well as competing priorities with equipment (e.g., three teams needed the same tools but only one set is available on site);

- Not completing the job on time could result in halting production and being blamed for financial losses;

- Drops prevention devices were not available on site and would have had to be bought, which would have taken a few days; and

- Supervisor’s leadership style: “If you don’t know something, you are an idiot,” which makes it impossible to ask questions, as the supervisor would give overtime to somebody else.

Having insight into these challenges and constraints changes our perspective again, demonstrating that many things could have gone wrong and people were inevitably doing their best given the uncertainty and situational constraints. The insight obtained by asking a series of questions around “how potential negative outcomes can unfold” is much more helpful in addressing contributing factors and preventing future issues.

Also, having a bigger picture of the context and other people’s contributions to the situation makes the desire to blame and punish irrelevant, as now we would have to discipline 20 or more people, including leaders from different departments, engineers, planners and support functions such as procurement and contractor management. It would be impractical and unhelpful.

Finally, in light of those insights, “failure to recognize the hazard” as a root-cause conclusion becomes a superficial label, hiding the complexity and constraints that stem from multiple interdependent teams trying to achieve their sometimes competing objectives. For example, a team may be expected to be thorough, which requires time, while also completing the job within a short time frame because of operational dependencies, e.g. another team must start its activity in the same location.

So, how do we explain behavior, if not by saying that people failed to recognize the hazard?

One popular approach in society is so-called rational choice theory, which originated in the 1700s with economist Adam Smith, who tried to explain how people decide to spend their money. This approach argues that behaviors result from conscious decisions based on careful analysis of all available options, with access to all information and no time constraints. Therefore, if all those efforts are applied, the decision must lead to positive outcomes.

From this point of view, if somebody makes a decision that results in an undesired outcome, it is easy to claim they did not put enough effort into obtaining the needed information and analyzing the situation. This, once again, leads to blame with the ineffective advice to “try harder.”

However, there are hidden assumptions underpinning this approach:

- Reality can be objectively described by anyone.

- All information is available.

- There are no constraints, e.g. time, tools, people or space.

- All potential outcomes can be predicted.

Unfortunately, in everyday life in complex organizations, none of these assumptions are true.

Dr Sidney Dekker from Griffith University’s Safety Science Innovation Lab states in his book, “Drift into Failure”: “Perfect knowledge is impossible. Instead, decision making calls for judgements under uncertainty, ambiguity and time pressure. Options that appear to work are better than perfect options that never get analyzed. Reasoning in complex systems is governed by people’s local understanding, by their focus of attention and existing knowledge. People do not make decisions according to the rational choice theory. What matters for them is that the decision (mostly) works in their situation.”

After all, what workers are doing makes sense to them at the time. It is called local rationality, the idea that people are rational but not in the absolute (global) sense that they have all information or resources and infinite capacity to analyze that information. Instead, they are “locally” rational, in that they are doing reasonable things given their knowledge of the situation, their task and business objectives.

Therefore, to explain behavior after an event, we need to move away from the questions “why did it happen?” or “why didn’t they identify the hazard?” Rather, we need to ask “how did it make sense to them at the time?” and “what were the constraints in their situation?”

Practical solutions

What can organizations do to benefit from the insights described? How can they systematically reduce the impact of hindsight bias, given that informing people about it is not effective?

Changes may have to be made to the investigation process, leadership training and competencies of the HSE professionals. Leaders and investigators need to practice techniques to minimize the intensity of the hindsight bias.

Training in critical thinking and effective questioning will be essential for learning about the site-specific “local rationality” and for countering the fallacy that the worker is the cause and that the worker could have predicted and prevented the incident if only they had tried harder.

Additionally, investigators may need guidance on how to avoid drawing the conclusion that “failure to identify the hazard” was a root cause. As per industry guidance on human factors in incident investigations (IOGP Report 621), an investigation should offer a narrative describing the unfolding mindset of individuals whose actions and decisions contributed to the incident over time. These individuals include leaders, engineers, and procurement and competency personnel, among others.

Finally, as an industry, we need to be proactive and not wait for an incident before learning. Human performance is about proactive optimization of work systems in order to maximize effectiveness and minimize risk. By talking to workers about what makes their tasks difficult and how they depend on others, we can quickly learn about many issues that can contribute to incidents in the future.